Within multi-tiered systems of support (MTSS) or response to intervention (RtI), a crucial first step in preventing more serious academic difficulties is to accurately and efficiently identify students who need additional support before they fall further behind their peers. The QuALITY lab has evaluated the technical adequacy of existing screening instruments and explored the viability of new metrics to quantify risk. In addition, we have begun exploring novel approaches to conducting universal screening to better predict which students are in need of intervention. Much of this work has been conducted with Dr. David Klingbeil at the University of Wisconsin-Madison as well as Dr. Peter Nelson, director of research and innovation at ServeMinnesota, an AmeriCorps non-profit agency.

Technical Adequacy of Screening Instruments and Exploration of New Metrics

When evaluating student performance in reference to a benchmark, there are four possible outcomes: the student is truly at-risk and the screener correctly identifies them as such (True Positive); the student is truly not at-risk and the screener also predicts that the student is not at-risk (True Negative); the student is truly at-risk and the screener incorrectly identifies the student as not at-risk (False Negative); or the student is truly not at-risk and the screener incorrectly identifies the student as at-risk (False Positive). If many students are tested within a school system, the figure below captures the balance of these decision outcomes.

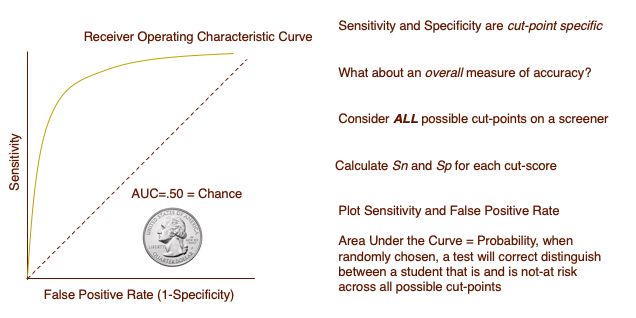

From this information, we can evaluate the extent to which the test correctly identifies students who are truly at-risk (sensitivity) as well as students who are truly not at-risk (specificity). In addition, we can explore how changing the cut-score against which student performance is compared changes the sensitivity and specificity of our decisions.
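As a minimal sketch of these definitions, the Python snippet below counts the four decision outcomes at a given cut-score and computes sensitivity and specificity. The scores, outcome labels, and cut-scores are hypothetical illustration data, and the rule that scores below the cut-score are flagged as at-risk is an assumption rather than a property of any particular screener.

```python
def count_outcomes(scores, truly_at_risk, cut_score):
    """Count TP, FP, TN, FN when scores below cut_score are flagged as at-risk."""
    tp = fp = tn = fn = 0
    for score, at_risk in zip(scores, truly_at_risk):
        flagged = score < cut_score  # assumed decision rule
        if flagged and at_risk:
            tp += 1
        elif flagged and not at_risk:
            fp += 1
        elif not flagged and at_risk:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def sensitivity(tp, fn):
    """Proportion of truly at-risk students the screener flags."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Proportion of truly not at-risk students the screener does not flag."""
    return tn / (tn + fp)


# Hypothetical screener scores and later outcomes (True = truly at-risk).
scores = [10, 12, 15, 18, 20, 22, 25, 30]
truly_at_risk = [True, True, True, False, True, False, False, False]

for cut in (16, 21):
    tp, fp, tn, fn = count_outcomes(scores, truly_at_risk, cut)
    print(cut, sensitivity(tp, fn), specificity(tn, fp))
```

With this made-up data, raising the cut-score from 16 to 21 catches the one at-risk student who was missed, at the cost of flagging one student who is not at-risk. This is the sensitivity and specificity trade-off described above.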

Test companies are expected to conduct and report diagnostic accuracy analyses for the measures they sell. However, it is important that proposed cut-scores are verified by independent research groups using diverse samples. As such, a number of research projects are underway in the QuALITY lab related to evaluating existing screening instruments used by schools within MTSS frameworks.

In addition to using traditional metrics to evaluate the performance of screening instruments, the QuALITY lab has explored the use of more novel metrics to estimate the probability that a given student needs additional support, considering not only their performance on a screener but also other relevant information (e.g., historical rate of failure in their school system, performance during the previous academic year, rate of improvement experienced during a previous intervention). The lab has explored the use of post-test probabilities using Bayesian analysis to better understand thresholds of risk. For example, rather than determining only whether a student should or should not receive intervention, perhaps there is a probability of risk that suggests additional assessment is warranted. The use of post-test probabilities allows us to explore the viability of more nuanced decision-making practices with regard to screening.
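To illustrate the idea, the sketch below applies Bayes' theorem to turn a pre-test probability (for example, a school's historical base rate of risk) into a post-test probability given a screening result. The base rate, sensitivity, specificity, and the two decision thresholds are hypothetical values chosen for illustration, not figures from the lab's studies.

```python
def post_test_probability(pre_test, sens, spec, screened_positive):
    """Probability a student is truly at-risk given the screening result."""
    if screened_positive:
        true_hit = sens * pre_test                # at-risk and flagged
        false_hit = (1 - spec) * (1 - pre_test)   # not at-risk but flagged
    else:
        true_hit = (1 - sens) * pre_test          # at-risk but not flagged
        false_hit = spec * (1 - pre_test)         # not at-risk and not flagged
    return true_hit / (true_hit + false_hit)


def recommend(probability, lower=0.25, upper=0.75):
    """Three-way decision using hypothetical probability thresholds."""
    if probability >= upper:
        return "intervention"
    if probability >= lower:
        return "additional assessment"
    return "no intervention"


# Hypothetical inputs: 20% base rate, sensitivity 0.80, specificity 0.90.
p_positive = post_test_probability(0.20, 0.80, 0.90, screened_positive=True)
p_negative = post_test_probability(0.20, 0.80, 0.90, screened_positive=False)

print(round(p_positive, 3), recommend(p_positive))
print(round(p_negative, 3), recommend(p_negative))
```

Under these assumed values, a positive screen raises the probability of risk from 0.20 to roughly 0.67, which falls in the middle band where further assessment, rather than immediate intervention, might be considered.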

Novel Approaches to Universal Screening

In line with exploring the viability of novel metrics to determine academic risk, the QuALITY lab has investigated novel approaches to conducting universal screening. Traditionally, educators would administer a single measure to determine student risk and make intervention recommendations. Doing so may yield an unacceptably large number of false positive (FP) and false negative (FN) cases. One approach to limiting the number of incorrect decisions is to supplement a single universal screening measure with another measure to assess risk.

Multivariate Screening

In multivariate screening, multiple indicators are combined to create a composite score that signifies risk. The QuALITY lab has been engaged in research on the optimal weights to assign to different indicators as well as the relative value of collecting additional data versus using a single measure to assess risk.
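One simple way to form such a composite, shown here only as a sketch, is to standardize each indicator and take a weighted sum. The indicator names, data, and weights below are hypothetical, and standardizing with z-scores is an assumption made for illustration rather than the lab's method.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values to mean 0 and standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]


def composite_scores(indicators, weights):
    """Weighted sum of standardized indicators, one composite per student."""
    standardized = {name: z_scores(vals) for name, vals in indicators.items()}
    n_students = len(next(iter(indicators.values())))
    return [
        sum(weights[name] * standardized[name][i] for name in indicators)
        for i in range(n_students)
    ]


# Hypothetical indicators for four students (higher = stronger performance).
indicators = {
    "screener": [10, 20, 30, 40],
    "prior_year": [1, 3, 2, 4],
}
weights = {"screener": 0.6, "prior_year": 0.4}  # hypothetical weights

composite = composite_scores(indicators, weights)
print([round(c, 3) for c in composite])
```

A cut-score can then be applied to the composite in the same way as to a single measure. How the weights should be chosen is exactly the open question the research above addresses.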

Gated Screening

In contrast to combining indicators to create a composite score, another approach involves first administering one measure to an entire student population, then selectively administering follow-up measures to those who are identified as at-risk on the first measure. This process is referred to as gated screening. The QuALITY lab has been engaged in several studies related to identifying the optimal combination of assessments to use in a gated framework, the optimal number of gates to employ when identifying risk, and the consequences of using alternate decision rules to determine who proceeds further in the assessment process.
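The two-gate case can be sketched as follows. The student identifiers, scores, and cut-scores are hypothetical, and the decision rule (a student is identified as at-risk only if flagged at both gates) is one possible rule among the alternatives mentioned above.

```python
def gated_screening(gate1_scores, gate2_scores, gate1_cut, gate2_cut):
    """Two-gate screening: only students flagged at gate 1 take gate 2.

    Returns the students flagged at both gates and the number of
    follow-up assessments that had to be administered.
    """
    # Gate 1: every student is screened.
    passed_on = [s for s, score in gate1_scores.items() if score < gate1_cut]
    # Gate 2: only students flagged at gate 1 are assessed again.
    at_risk = [s for s in passed_on if gate2_scores[s] < gate2_cut]
    return at_risk, len(passed_on)


# Hypothetical scores for four students on two measures.
gate1_scores = {"A": 15, "B": 18, "C": 25, "D": 30}
gate2_scores = {"A": 40, "B": 60, "C": 30, "D": 70}

at_risk, follow_ups = gated_screening(gate1_scores, gate2_scores, 20, 50)
print(at_risk, follow_ups)
```

In this made-up example, student C would have scored below the second cut-score but is never assessed at gate 2. Under this decision rule, later gates can remove false positives from gate 1 but cannot recover its false negatives, which is one reason the choice of decision rule matters.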

Locally Derived Cut-Scores

Vendor-provided cut-scores to signify risk may or may not be appropriate for a given school setting. If, for instance, the norm sample used by the test makers to derive cut-scores is vastly different from the population a school psychologist is testing, the likelihood of making inaccurate decisions is very high. As a result, one can estimate local cut-scores to signify risk using extant data. Members of the QuALITY lab have compared the performance of locally derived cut-scores to those provided by vendors in a number of studies. In addition, previous investigations have determined that locally derived cut-scores can be used with multiple cohorts over several years as long as the makeup of student demographics does not shift drastically. Drs. Van Norman, Klingbeil, and Nelson have created a worksheet that allows schools to derive local cut-scores and determine whether gated screening approaches make sense in their building. That worksheet can be downloaded here.
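As a rough illustration of deriving a local cut-score from extant data, the sketch below searches the observed scores for the cut-score that maximizes Youden's J (sensitivity + specificity - 1). Youden's J is one common selection criterion and is an assumption here; it is not necessarily the approach used in the worksheet. The data and the vendor cut-score are hypothetical.

```python
def youden_j(scores, truly_at_risk, cut_score):
    """Sensitivity + specificity - 1 when scores below cut_score are flagged."""
    tp = sum(1 for s, r in zip(scores, truly_at_risk) if s < cut_score and r)
    tn = sum(1 for s, r in zip(scores, truly_at_risk) if s >= cut_score and not r)
    positives = sum(truly_at_risk)
    negatives = len(truly_at_risk) - positives
    return tp / positives + tn / negatives - 1


def local_cut_score(scores, truly_at_risk):
    """Observed score that maximizes Youden's J in the local data."""
    candidates = sorted(set(scores))
    return max(candidates, key=lambda c: youden_j(scores, truly_at_risk, c))


# Hypothetical local data: screener scores and later outcomes.
scores = [8, 10, 12, 15, 18, 19, 20, 22, 25, 30]
truly_at_risk = [True, True, True, True, False, False, True, False, False, False]

vendor_cut = 22  # hypothetical vendor-provided cut-score
local_cut = local_cut_score(scores, truly_at_risk)

print(local_cut, round(youden_j(scores, truly_at_risk, local_cut), 2))
print(vendor_cut, round(youden_j(scores, truly_at_risk, vendor_cut), 2))
```

In practice, a school might prioritize sensitivity over specificity instead of weighting them equally, and any local cut-score should be checked against later cohorts before it is relied on.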

Please note that the QuALITY lab is always looking to partner with educators to address applied problems that they face (e.g., is this screener performing how we think it is performing?), and has collaborated numerous times in publishing those findings in peer-reviewed studies. Through working with local school districts in the Lehigh Valley and beyond, we hope to make inroads in addressing the research-to-practice gap. Please feel free to contact us if you would like help evaluating your universal screening practices or are interested in pursuing an applied research project.